NOTE: This article has been updated and moved to the Codeless Platforms Documentation Portal.

If you are seeing this message and have not been redirected, click: How to Design a “Chunked” Task to Process a Large Amount of Rows Over Multiple Task Runs.

<!–

Introduction

You may find that running tasks that process high volumes of rows may results in multiple errors due to the large amount of processing.

This article describes how to design such a task, but where the high number of rows processed is “chunked” into multiple task runs.

Step 1: Create a Table to Log Task Events

To prevent the task from processing the same row, we need to create a database table to mark when rows have been processed. It also has the benefit of allowing the task to continue processing the next chunk from where the last chunk ended.

- If you don’t have an instance of SQL Server installed on the BPA Platfomr server, download and install a local SQL Server Express instance.

- If one doesn’t already exist, create a new database.

- In the database, create a table — QUEUE — with the following fields:

| Field Name | Data Type | Comments |

|---|---|---|

| EVENT_ID | varcher (200), PRIMARY KEY, NOT NULL | Primary key of the QUEUE table. Populated from the primary key of the external system. A data type of If the external system is known, the field type should be aligned with that of the external system. |

| TASK_ID | int | The task ID |

| TASK_STATUS | bit, NOTNULL | Unprocessed = 0, Processed = 1 |

Step 2: Create Stored Procedures to Maintain the Queue Table

In SQL Server or SQL Express, create the following two stored procedures; the procedures are used to INSERT and UPDATE the QUEUE table:

SQL Script for the INSERT Procedure

CREATE PROCEDURE INSERT_EVENT @PRIMARY_KEY varchar(200), @TASK_ID int AS BEGIN INSERT INTO QUEUE (EVENT_ID, TASK_ID, TASK_STATUS) VALUES ( @PRIMARY_KEY, @TASK_ID, 0 -- Unprocessed ) END GO

SQL Script for the UPDATE Procedure

CREATE PROCEDURE UPDATE_EVENT @PRIMARY_KEY varchar(200) AS BEGIN UPDATE QUEUE SET TASK_STATUS = 1 –- Processed WHERE EVENT_ID = @PRIMARY_KEY END GO

Step 3: Build the Chunked Task

The mandatory tools needed to build the chunked task are:

- Call Stored Procedure (OLEDB)

- Call Task

- Decision

All other tools used in the task are dependent on the job requirements.

Call Stored Procedure (OLEDB)

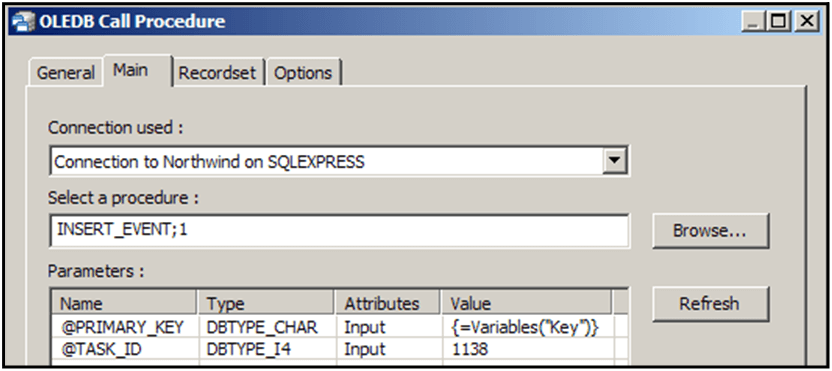

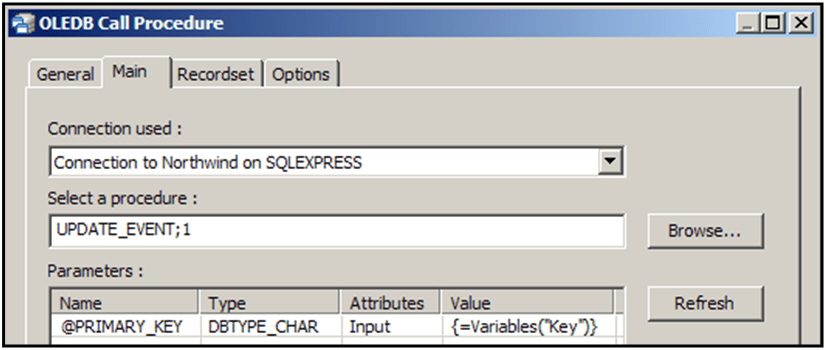

Call Stored Procedure (OLEDB) steps are used to call the INSERT_EVENT and UPDATE_EVENT procedures created in step 2. These ensure the task can “keep track” of processed rows.

A task variable is required which holds the key value for the row currently being processed — Typically, this is the primary key for the actual data row that becomes the EVENT_ID value in the QUEUE table.

The first step inserts an unprocessed row into the QUEUE table using the INSERT_EVENT stored procedure:

The second step updates the TASK_STATUS field in the QUEUE table to mark it as processed. This step uses the UPDATE_EVENT stored procedure:

Call Task

The Call Task step is used to run the same task again. A few things to note when setting up the Call Task step:

- When setting up the global configuration:

- Create a username and password specifically for the step to use when running the task — It is recommended you don’t use the same username and password that is used to log into the BPA Platform client as this could lock up the task run

- When setting up the step configuration:

- Data sources and object mapping are not required

- Choose the chunked task as the Object and Queue as the Operation in the Mapping tab

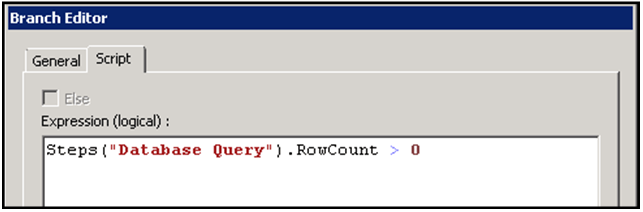

Decision

The Decision step is used to check the number of rows processed. The task should only continue where RowCount > 0 (that is, there are rows to process). This prevents the task from going into an endless loop.

RowCount property for the task step that generates the initial rows into the Decision step’s Branch Editor:

Example Implementation

Let’s take as an example, a BPA Platform task which monitors a sales order system. It obtains a list of all new orders for the day and emails out each order as a PDF report:

- A Database Query (ODBC or OLEDB) task step produces 1000 rows

- A Crystal report is required for each row returned

- Each task run processes 50 rows each time until all 1000 rows have their report generated

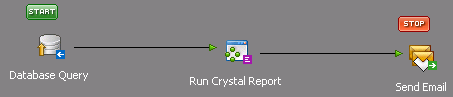

Task Design Before Implementing Task Chunking

The following shows the task design before the chunking has been applied:

The task performs the following actions:

- Database Query — Returns a recordset

- Run Crystal Report — Generates a PDF report from the recordset data; one report for each row

- Send Email (SMTP) — Sends an email containing a report

Task Design After Implementing Task Chunking

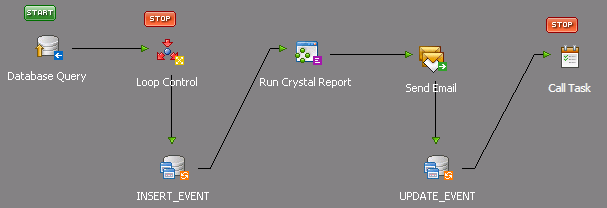

The following shows the task design after the chunking has been applied:

where:

Database Query Step

Returns a recordset that produces a restricted amount of rows. The SQL in the query step has been amended to JOIN the Orders table to the new QUEUE table with a WHERE clause of TASK_STATUS = 0, ensuring that it only picks up the unprocessed transactions:

SELECT TOP 50 OrderID OrderDate CustomerName FROM [DB_Name].dbo.SalesOrders INNER JOIN [DB_Name].dbo.QUEUE ON [DB_Name].dbo.SalesOrders.OrderID = [DB_Name].dbo.QUEUE.EVENT_ID WHERE [DB_Name].dbo.QUEUE.TASK_STATUS = 0

Replace [DB_Name] with the parent database name.

Loop Control Step

This is a Decision step that checks the RowCount property of the Database Query step.

INSERT_EVENT Step

This is a Call Stored Procedure (OLEDB) step that inserts an unprocessed row into the QUEUE table using the INSERT_EVENT stored procedure created in step 2.

Run Crystal Report Step

Using the Run Crystal Report tool, this step generates PDF reports from the recordset data — one for each row.

Send Email Step

Using the Send Email (SMTP) tool, this step then sends an email with the PDF report attached.

UPDATE_EVENT Step

Another Call Stored Procedure (OLEDB) step updates the TASK_STATUS field in the QUEUE table to mark it as processed. This step uses the UPDATE_EVENT stored procedure created in step 2.

Call Task Step

The Call Task step loops the task back on itself to retrieve and process the next 50 records.